It is 2012: cloud computing and cloud storage have arrived and are still growing. We expect all our data to be available 24/7, and every device is online and gives us access to the internet:

The Big Big Cloud of Everything.

Metadata is data that describes data. So what does that mean?

Let me give you an example: I provided computer support for many of my friends, and one friend in particular used to call me almost daily to solve some of his problems, and I used to tell him "write things down". He kept forgetting the logins and passwords of the services he used, and also the root passwords of the machines I had installed, so I said "write down the password!", and he did: on a piece of paper he wrote down "hello12KB" (as an example):

Pieces of paper with passwords on them: data without metadata

Weeks passed and, as you can guess, there were several pieces of paper with words written on them . . . which password belonged to which login, which computer or which web service? The data that described the data had NOT been written down, and, as you rightly assume, without the metadata the data becomes useless.

"My Thesis 2.doc", "Test.doc", "New Test.doc": yes, these are the kind of filenames that are supposed to describe what the data is.

I personally name files with some relevant metadata, e.g. the date "2012-07-10", as in "My Thesis 2012-07-10.odt".

It is a bad habit to name files like "July 10, 2012", because simple sorting by filename then gives no meaningful order: month names sorted alphabetically don't get you anywhere. "07-10-11" is even bigger nonsense: which part is the year, which the month and which the day? Sure, you always write it the same way, but others have to guess. And no matter how cleverly you name your files, you can't embed everything you really want; the filenames would become unreadable.
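A quick illustration of the sorting argument, using Python's standard datetime module. The dates and the helper iso_filename are made up for the example, and the month names assume the default C/English locale. Month-name filenames sort alphabetically rather than chronologically, ISO-style YYYY-MM-DD strings sort correctly, and "07-10-11" parses to three different dates depending on which convention the reader assumes:

```python
from datetime import date, datetime


def iso_filename(title: str, d: date, ext: str) -> str:
    """Build a filename such as 'My Thesis 2012-07-10.odt' (illustrative helper)."""
    return f"{title} {d.isoformat()}.{ext}"


dates = [date(2012, 7, 10), date(2012, 8, 1), date(2012, 12, 24)]

# "July 10, 2012" style: sorted alphabetically by month name, not by time.
by_month_name = sorted(d.strftime("%B %d, %Y") for d in dates)

# ISO 8601 style (YYYY-MM-DD): string order equals chronological order.
by_iso = sorted(d.isoformat() for d in dates)

# The same string "07-10-11" read with three plausible conventions.
ambiguous = {fmt: datetime.strptime("07-10-11", fmt).date()
             for fmt in ("%m-%d-%y", "%d-%m-%y", "%y-%m-%d")}

print(iso_filename("My Thesis", date(2012, 7, 10), "odt"))  # My Thesis 2012-07-10.odt
print(by_month_name)  # ['August 01, 2012', 'December 24, 2012', 'July 10, 2012']
print(by_iso)         # ['2012-07-10', '2012-08-01', '2012-12-24']
print(ambiguous)
```

The ambiguous dictionary maps the three formats to 10 July 2011, 7 October 2011 and 11 October 2007 respectively: one filename, three readings.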

The use of directories or folders has helped a bit:

- Thesis 2012/
  - Notes/
  - Sketches/
  - Mindmap By S. Sturges 2009.pdf
  - Human Psyche 2012-07-10.odt
  - Human Psyche 2012-07-10.pdf

Important files are fully qualified: even without context (their location in the filesystem) their names reveal their identity. Disposable files, such as text files with URLs or notes, may share the same filename and show their relevance only through their location, i.e. their association or context. But this still doesn't cover metadata at the level it should!

Metadata has been hugely neglected because it is a pain to obtain, preserve or (re-)create.

I addressed this challenge with the Universal Annotation System (UAS), but I admit I hardly use it: it is not well integrated into the system, it is just an add-on. Still, it works on Linux, Windows (CLI/Cygwin/Perl) and Mac OS X (CLI); no desktop interface has been made yet (which I should do).

MP3 files support several metadata tags by default: title, artist, album, year and genre; even the cover photo is preserved when you copy the MP3 file. You need special software to edit this metadata.
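These tags are stored in ID3 blocks inside the file. The simplest variant, ID3v1, is a fixed 128-byte block at the very end of the file: "TAG", then title (30 bytes), artist (30), album (30), year (4), comment (30) and a one-byte genre index. A minimal sketch that reads it from the file's bytes (read_id3v1 is an illustrative name, not a library API); note that ID3v1 has no room for a cover image, which lives in the newer ID3v2 format at the start of the file:

```python
from __future__ import annotations


def read_id3v1(data: bytes) -> dict | None:
    """Parse an ID3v1 tag from the last 128 bytes of MP3 content, if present."""
    if len(data) < 128:
        return None
    tag = data[-128:]
    if tag[:3] != b"TAG":
        return None  # no ID3v1 tag (the file may still carry ID3v2)

    def text(start: int, length: int) -> str:
        # Fields are Latin-1 text, padded with NUL bytes or spaces.
        return tag[start:start + length].rstrip(b"\x00 ").decode("latin-1")

    return {
        "title": text(3, 30),
        "artist": text(33, 30),
        "album": text(63, 30),
        "year": text(93, 4),
        "comment": text(97, 30),
        "genre": tag[127],  # index into the standard ID3v1 genre list
    }
```

Usage would be along the lines of read_id3v1(open("song.mp3", "rb").read()), where "song.mp3" is a hypothetical file.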

Every webpage has a URL and a title, aside from its content. The URL is like the path and filename on your local computer, but the title is the most useful metadata. One could argue that the entire HTML markup gives more information, but HTML has mixed design and content markup and is now, so to speak, a complete mess: what does <b>bold</b> mean? That something is important, or just that it is rendered bold? Is <strong> more meaningful? I guess you get my point: the distinction between design and true markup has been blurred, and due to this unfortunate circumstance a lot of metadata is lost, even though it existed at the moment the data was created.
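Pulling out the title, that most useful piece of webpage metadata, takes only a few lines with Python's standard html.parser module. A minimal sketch (TitleParser and page_title are illustrative names) that collects the text inside the title element and ignores all other markup:

```python
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collect the text inside <title>...</title>; ignore everything else."""

    def __init__(self) -> None:
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":  # HTMLParser reports tag names in lower case
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def page_title(html: str) -> str:
    parser = TitleParser()
    parser.feed(html)
    parser.close()
    return parser.title.strip()


print(page_title("<html><head><title>Human Psyche</title></head>"
                 "<body><b>bold</b></body></html>"))  # Human Psyche
```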

The metadata in JPEG photos is actually done well, thanks to EXIF, which contains the most important metadata: the camera's shutter time, date and time (even though the time zone is often unknown) and nowadays often also a GPS-based location. Needless to say, many graphics programs destroy the EXIF metadata, or overwrite it because the program cannot contribute its own information to it; this is due to the ignorance of the developers and the limitations of the metadata format.
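Inside a JPEG file, EXIF lives in an APP1 segment (marker 0xFFE1) whose payload starts with "Exif" and two NUL bytes, among the header segments that precede the compressed image data (marker 0xFFDA). A minimal sketch that walks those segments and returns the raw EXIF block, which is handy for checking whether a program silently dropped it on re-saving. find_exif is an illustrative name, and the sketch ignores rarities such as padding bytes between segments:

```python
from __future__ import annotations

import struct

SOS, APP1 = 0xDA, 0xE1  # start-of-scan and APP1 marker codes


def find_exif(jpeg: bytes) -> bytes | None:
    """Return the EXIF (TIFF) block from a JPEG's APP1 segment, or None."""
    if jpeg[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            raise ValueError(f"expected a segment marker at offset {pos}")
        marker = jpeg[pos + 1]
        if marker == SOS:
            break  # compressed image data follows; header segments end here
        # The segment length is big-endian and counts its own two bytes.
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        payload = jpeg[pos + 4:pos + 2 + length]
        if marker == APP1 and payload.startswith(b"Exif\x00\x00"):
            return payload[6:]
        pos += 2 + length
    return None
```

Comparing find_exif on a photo before and after it has passed through an editor shows directly whether the EXIF block survived.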

Before I jump to the solution, let me look closely at the problem itself: the way we store data. How can we even attach metadata to data? The filename convention is limiting; extending the existing file structure is . . . possible, if we all agreed that every file format be an XML (or similarly structured) file, in which we could put as many metadata fields as we like, followed by the binary data itself, for example:

    <xml>
      <title content="DSC0001"/>
      <date content="2012/07/10 15:10:07.012" timezone="+1:00"/>
      <location longitude="20.1182" latitude="8.3012"
                elevation="800.23" celestialbody="Earth"/>
      <annotation type="audio/mpeg" encoding="base64">...
      ....</annotation>
      <revision date="2012/07/12 10:05:01.192">GIMP-1.20:
        brightness(1.259),
        contrast(2.18),
        rgbadj(0.827,1.192,0.877)</revision>
      <annotation type="image/jpeg"
                  encoding="binary" length="50982">....
      </annotation>
    </xml>

This would describe a photo taken with a camera, with some audio attached (describing it or recording some sound), and then altered with GIMP.
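Once such a header exists, any tool can read it with a standard XML parser. The example above contains elided binary content and is not literally parseable, so this minimal sketch uses a text-only version of the same header; summarize is an illustrative name, and the element and attribute names are simply the ones from the example:

```python
import xml.etree.ElementTree as ET

HEADER = """<xml>
  <title content="DSC0001"/>
  <date content="2012/07/10 15:10:07.012" timezone="+1:00"/>
  <location longitude="20.1182" latitude="8.3012"
            elevation="800.23" celestialbody="Earth"/>
  <revision date="2012/07/12 10:05:01.192">GIMP-1.20: brightness(1.259)</revision>
</xml>"""


def summarize(header: str) -> dict:
    """Pull the key metadata fields out of a metadata header."""
    root = ET.fromstring(header)
    date = root.find("date")
    loc = root.find("location")
    return {
        "title": root.find("title").get("content"),
        "date": date.get("content"),
        "timezone": date.get("timezone"),
        "position": (float(loc.get("latitude")), float(loc.get("longitude"))),
        "revisions": [r.get("date") for r in root.findall("revision")],
    }


print(summarize(HEADER)["title"])  # DSC0001
```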

Technically, such a transition could be made by tampering with the open() call (which opens a file) in the operating system (OS) and the related low-level file-access libraries, so that it skips over the XML header for all applications, and by adding an openXML() call which opens the file including the XML chunk and parses the header. Importantly, file-copy operations would use openXML(), while all other existing programs, e.g. GIMP or Photoshop, would still read an existing JPEG through the modified open(), which skips the leading XML chunk.
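To make the idea concrete, here is a user-space sketch in Python rather than an actual OS-level change. It assumes a file layout the text only implies: a well-formed text XML header that starts with <xml> and ends at the first </xml>, followed directly by the unchanged original bytes. split_header, open_data (standing in for the modified open()) and open_xml (standing in for openXML()) are hypothetical names:

```python
from __future__ import annotations

import io
import xml.etree.ElementTree as ET

# Hypothetical layout: "<xml>...</xml>" header, then the original bytes.
START, END = b"<xml>", b"</xml>"


def split_header(raw: bytes) -> tuple[bytes, bytes]:
    """Split file content into (xml_header, payload); legacy files have no header."""
    if not raw.startswith(START):
        return b"", raw
    end = raw.index(END) + len(END)
    return raw[:end], raw[end:]


def open_data(path: str) -> io.BytesIO:
    """Stand-in for the modified open(): applications only see the payload."""
    with open(path, "rb") as f:
        return io.BytesIO(split_header(f.read())[1])


def open_xml(path: str) -> tuple[ET.Element | None, bytes]:
    """Stand-in for openXML(): metadata-aware tools also get the parsed header."""
    with open(path, "rb") as f:
        header, payload = split_header(f.read())
    return (ET.fromstring(header) if header else None), payload
```

A copy tool would simply copy the raw bytes, header included, which is why the metadata would travel with the file. Binary annotations embedded inside the header, as in the example above, would need length-prefixed framing instead of a search for </xml>.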

Once this is done, we could easily start attaching metadata to our data, since it would be integrated into the existing file.

I realized that all other ways of attaching metadata externally make it hard to keep the metadata properly attached: only in a tightly supervised system can you ensure that metadata stays tied to the data.

First of all, a title has to be given; the filename could then be anything, since the title is the most relevant piece of metadata.

Further, date and time should be as exact as possible (not just seconds, but milliseconds or microseconds).
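A minimal sketch of producing the date attributes used in the examples from a timezone-aware Python datetime, with millisecond precision. date_attrs is an illustrative name, and writing the offset zero-padded as "+01:00" (ISO 8601 style, where the examples write "+1:00") is an assumption; for microseconds one would use strftime's %f field instead:

```python
from datetime import datetime, timedelta, timezone


def date_attrs(dt: datetime) -> dict[str, str]:
    """Format an aware datetime as content/timezone attributes (milliseconds)."""
    offset = dt.utcoffset()
    if offset is None:
        raise ValueError("timestamp must carry a time zone")
    millis = dt.microsecond // 1000
    minutes = int(offset.total_seconds()) // 60
    sign = "+" if minutes >= 0 else "-"
    hours, mins = divmod(abs(minutes), 60)
    return {
        "content": dt.strftime("%Y/%m/%d %H:%M:%S") + f".{millis:03d}",
        "timezone": f"{sign}{hours:02d}:{mins:02d}",
    }


taken = datetime(2012, 7, 10, 15, 10, 7, 12000,
                 tzinfo=timezone(timedelta(hours=1)))
print(date_attrs(taken))
# {'content': '2012/07/10 15:10:07.012', 'timezone': '+01:00'}
```

Refusing naive timestamps is deliberate: the EXIF problem of an unknown time zone is exactly what this avoids.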

Further, the location where the data was created.

Further, all alterations made to the file. One could even store the delta (diff) in the metadata, so a revision could be undone independently of the filesystem; that means a file can be transferred between filesystems with its own revision history embedded.

    <revision
        date="2010/07/08 09:10:13.1823" timezone="+1"
        comment="Changed color from red to blue"
        type="data/diff"
        encoding="base64">7sn38vma85...26743abf738</revision>
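A minimal sketch of such a self-contained revision in Python. The diff format (difflib.ndiff output, which difflib.restore can turn back into either version) and the JSON-then-Base64 encoding are assumptions, since the text does not prescribe a format; make_revision, undo and redo are hypothetical names. An ndiff delta contains both versions in full, so a real system would prefer a more compact delta:

```python
import base64
import difflib
import json
import xml.etree.ElementTree as ET


def make_revision(old: str, new: str, date: str, comment: str) -> ET.Element:
    """Build a <revision> element carrying a reversible diff of old -> new."""
    delta = list(difflib.ndiff(old.splitlines(keepends=True),
                               new.splitlines(keepends=True)))
    rev = ET.Element("revision", date=date, comment=comment,
                     type="data/diff", encoding="base64")
    # JSON keeps each delta line intact, even a last line without a newline.
    rev.text = base64.b64encode(json.dumps(delta).encode("utf-8")).decode("ascii")
    return rev


def _delta(rev: ET.Element) -> list[str]:
    return json.loads(base64.b64decode(rev.text))


def undo(rev: ET.Element) -> str:
    """Recover the content as it was before this revision."""
    return "".join(difflib.restore(_delta(rev), 1))


def redo(rev: ET.Element) -> str:
    """Recover the content as it was after this revision."""
    return "".join(difflib.restore(_delta(rev), 2))


old = "color: red\nsize: 10\n"
new = "color: blue\nsize: 10\n"
rev = make_revision(old, new, "2010/07/08 09:10:13.1823",
                    "Changed color from red to blue")
print(undo(rev) == old, redo(rev) == new)  # True True
```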

Let me take the folder example above and make it ONE file:

    <xml>
      <title content="Thesis 2012"/>
      <date content="2012/07/10 15:10:07.012" timezone="+1:00"/>
      <location longitude="20.1182" latitude="8.3012"
                elevation="800.23" celestialbody="Earth"/>
      <annotation title="Sites" type="text/html">
        <a href="http://important.com/link/to/some/data.sqldump"
          >study 1996-01-20 raw data Prof. Studer (Vienna)</a>
      </annotation>
      <annotation type="application/vnd.oasis.opendocument.text"
                  encoding="binary" length="125162"
        >....</annotation>
      <revision
          date="2010/07/08 09:10:13.1823" timezone="+1"
          location.longitude="20.1182" location.latitude="8.3012"
          location.elevation="800.23" location.planet="Earth"
          type="data/diff"
          encoding="base64">7sn38vma85...26743abf738</revision>
    </xml>

Features:

- All notes are included, e.g. as HTML.

- Revisions are stored either within the ODT file itself or as <revision> elements.

This way we can trace, view or undo changes even much later, and still have the notes attached to the original research.

Currently (as of 2012/07) only Mac OS X does desktop search right: all data is indexed at storage time, with no indexing after the fact. Windows 7 and Linux are still a mess regarding desktop search: you either have to fiddle with which data gets indexed, or you are left with the worst kind of search, where searching for a string in all files means opening and reading every single file. In the age of terabyte (~10¹² bytes) hard disks this takes several hours or even days, depending on the read speed. It is incredible that so little attention has been given to offline or desktop search, except by Apple, which does many things right and a few things not; the open-source community as well as Microsoft are still trying to catch up in terms of innovation.
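A rough check of the "hours to days" estimate for a single terabyte, assuming a sustained read rate of about 100 MB/s (an assumed figure for a typical hard disk of the time, not one given in the text):

$$
t = \frac{10^{12}\ \text{bytes}}{100 \times 10^{6}\ \text{bytes/s}} = 10^{4}\ \text{s} \approx 2.8\ \text{h}
$$

That is the best case of one uninterrupted sequential read; a scan that opens many small files spends much of its time seeking, and several disks or slower drives push the total towards days.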

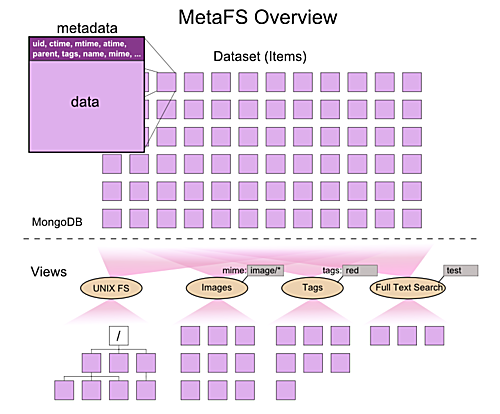

The solution is simple: make the filesystem a database. Auto-index all data: start by determining the proper file type from its MIME type, then assign the appropriate helpers and indexers based on that MIME type.
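A minimal sketch of indexing at storage time, assuming SQLite as the "database", Python's mimetypes module guessing the type from the filename extension (a real system would also sniff the content), and a plain inverted index (term to file) as the text indexer. INDEXERS, store, search and changed_since are hypothetical names; changed_since answers the same kind of question as the mtime part of the pseudo-SQL statement further down:

```python
from __future__ import annotations

import mimetypes
import re
import sqlite3
import time


def text_indexer(data: bytes) -> set[str]:
    """Indexer for text/* files: the set of lower-cased words."""
    return set(re.findall(r"[a-z0-9]+", data.decode("utf-8", "replace").lower()))


# Hypothetical registry: MIME type prefix -> indexer. Images, SVG and audio
# would get their own feature-extracting indexers here.
INDEXERS = {"text/": text_indexer}


def open_index() -> sqlite3.Connection:
    db = sqlite3.connect(":memory:")
    db.executescript("""
        CREATE TABLE files (id INTEGER PRIMARY KEY, path TEXT UNIQUE,
                            mime TEXT, mtime REAL);
        CREATE TABLE terms (term TEXT, file_id INTEGER REFERENCES files(id));
        CREATE INDEX terms_by_term ON terms(term);
    """)
    return db


def store(db: sqlite3.Connection, path: str, data: bytes,
          mtime: float | None = None) -> None:
    """Index a file when it is stored, so a search never has to re-read it."""
    mime = mimetypes.guess_type(path)[0] or "application/octet-stream"
    cur = db.execute("INSERT INTO files (path, mime, mtime) VALUES (?, ?, ?)",
                     (path, mime, time.time() if mtime is None else mtime))
    for prefix, indexer in INDEXERS.items():
        if mime.startswith(prefix):
            db.executemany("INSERT INTO terms VALUES (?, ?)",
                           [(t, cur.lastrowid) for t in indexer(data)])


def search(db: sqlite3.Connection, word: str) -> list[str]:
    rows = db.execute("""SELECT DISTINCT f.path FROM terms t
                         JOIN files f ON f.id = t.file_id
                         WHERE t.term = ? ORDER BY f.path""", (word.lower(),))
    return [r[0] for r in rows]


def changed_since(db: sqlite3.Connection, seconds: float) -> list[str]:
    rows = db.execute("SELECT path FROM files WHERE mtime > ? ORDER BY path",
                      (time.time() - seconds,))
    return [r[0] for r in rows]
```

A lookup is then an index query instead of a full scan of the disk; the cost moves to the moment of storing, when the data is at hand anyway.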

Index not just strings or text, but also graphics (both pixel-based and vector-based, e.g. SVG) and music, so one can find similar forms and structures in the data.

With a database view of, or access to, data properly enriched with metadata, we actually begin to understand the data we have . . . Google has done its part to make the internet searchable, but how does it cope when I want to find a book whose title is the same as the name of a dish or a city? The context is part of the metadata, and today it is only taken into account through tricks, such as adding a related term that uniquely connects to the context one is searching for.

A pseudo SQL-like statement:

select * from files where mtime > -1hr and revision.app == 'gimp'

Give me all files altered in the last hour by GIMP (a Photoshop-like open-source image editor), or imagine this:

select * from files where image ~ [image]

which lists a photo from your vacation: [image]

or

select * from files where image ~ circle(color=blue)

and the results look like this: [image]

Metadata is best obtained at the moment the data is created; at that moment it needs to be preserved, stored and properly made available for searching.

Let's see what the next years bring . . .